Computers are smart and, thanks to artificial neural networks, they are getting smarter. These networks, modelled after real neurological systems, allow computers to complete complex tasks such as image recognition. Until now, they have been impressively tough to fool. But one group of researchers claims to have found a way to reliably trick these networks into misidentifying what they see.

Optical Illusions for Neural Networks?

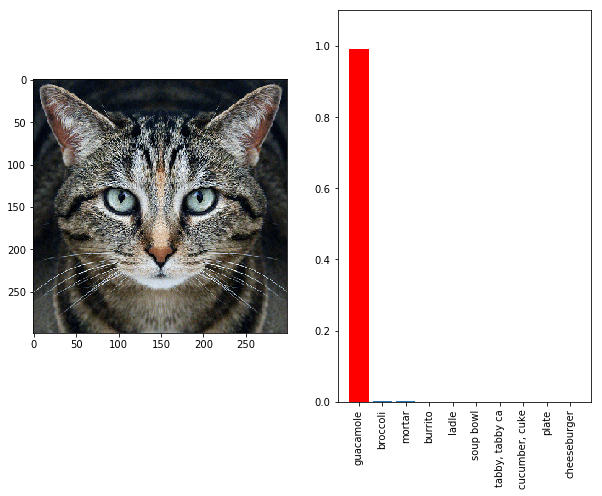

Pictures designed to trick image recognition networks are called "adversarial". That is, they are designed to look like one thing to us, and - through subtle modifications - to look like something entirely different to the network, creating a conflict. Take the example below:

We can clearly see that this is a cat. But by carefully "perturbing" the image, the team were able to convince Google's InceptionV3 image classifier that it was in fact guacamole. So far so good, but the trick only works from this specific angle. Rotate the image even slightly and the network correctly recognizes it as a cat.
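The article does not say how the perturbation is computed. As a rough illustration only, the sketch below shows one common gradient-based idea on a toy linear classifier written in plain Python. The classifier, its random weights, the 64 "pixels" and the function names are all made up for this example; none of this is InceptionV3 or the team's code.

```python
import math
import random

def softmax(scores):
    """Turn raw class scores into probabilities."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(weights, pixels):
    """Toy linear classifier (hypothetical): one weight vector per class."""
    return softmax([sum(w * p for w, p in zip(row, pixels)) for row in weights])

def perturb_towards(weights, pixels, target, eps=0.05, step=0.01, iters=50):
    """Nudge pixels toward class `target` without moving any pixel more than eps."""
    adv = list(pixels)
    for _ in range(iters):
        probs = classify(weights, adv)
        for j in range(len(adv)):
            # Gradient of log p[target] with respect to pixel j (linear-softmax model).
            grad = weights[target][j] - sum(p * row[j] for p, row in zip(probs, weights))
            sign = (grad > 0) - (grad < 0)
            moved = adv[j] + step * sign
            # Keep the change subtle: stay within eps of the original pixel.
            adv[j] = min(max(moved, pixels[j] - eps), pixels[j] + eps)
    return adv

random.seed(0)
weights = [[random.gauss(0, 1) for _ in range(64)] for _ in range(10)]  # 10 made-up classes
image = [random.random() for _ in range(64)]                            # 64 made-up pixels
before = classify(weights, image)
target = before.index(min(before))  # aim for the class the model finds least likely
adversarial = perturb_towards(weights, image, target)
after = classify(weights, adversarial)
print(f"target probability: {before[target]:.6f} -> {after[target]:.6f}")
```

Each pixel moves by at most `eps`, so the change stays small, yet the score of the chosen wrong class rises. Because the perturbation is tuned to one fixed view of the input, nothing in this sketch makes it survive a rotation or shift.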

The researchers, a team of MIT students called LabSix, wanted a trick that would work more reliably.

Adversarial Objects

They intended to show that not only still images but also real-world objects could be made "adversarial" to the network's pattern recognition. For this, they designed an algorithm to create 2D printouts and 3D-printed models with qualities that produce "targeted misclassification" whatever angle they are viewed from, even when blurred due to rapid camera movement.
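The article does not detail the algorithm. One general idea that fits the description is to optimise the perturbation over many transformed views at once rather than a single one. The sketch below assumes a toy linear classifier and uses circular shifts of a 1D "image" as a stand-in for changes in viewing angle; the classifier, the shift set and all function names are hypothetical, and this is not the team's implementation.

```python
import math
import random

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(weights, pixels):
    """Toy linear classifier (hypothetical): one weight vector per class."""
    return softmax([sum(w * p for w, p in zip(row, pixels)) for row in weights])

def shift(pixels, s):
    """Circularly shift the pixels by s positions; a crude stand-in for a new viewpoint."""
    s %= len(pixels)
    return pixels[-s:] + pixels[:-s]

def mean_log_prob(weights, pixels, target, shifts):
    """Average log-probability of `target` across all shifted views."""
    return sum(math.log(classify(weights, shift(pixels, s))[target]) for s in shifts) / len(shifts)

def robust_perturb(weights, pixels, target, shifts, eps=0.1, step=0.01, iters=60):
    """Raise the target score averaged over every view, keeping each pixel within eps."""
    n = len(pixels)
    adv = list(pixels)
    for _ in range(iters):
        total = [0.0] * n
        for s in shifts:
            probs = classify(weights, shift(adv, s))
            for j in range(n):
                i = (j + s) % n  # position that pixel j occupies in the shifted view
                total[j] += weights[target][i] - sum(p * row[i] for p, row in zip(probs, weights))
        for j in range(n):
            sign = (total[j] > 0) - (total[j] < 0)
            adv[j] = min(max(adv[j] + step * sign, pixels[j] - eps), pixels[j] + eps)
    return adv

random.seed(1)
weights = [[random.gauss(0, 1) for _ in range(64)] for _ in range(10)]  # made-up model
image = [random.random() for _ in range(64)]                            # made-up input
views = [-2, -1, 0, 1, 2]                                               # made-up "angles"
# Aim for the class the model finds least likely on average across the views.
target = min(range(10), key=lambda c: mean_log_prob(weights, image, c, views))
robust = robust_perturb(weights, image, target, views)
print(mean_log_prob(weights, image, target, views), "->",
      mean_log_prob(weights, robust, target, views))
```

Summing the gradient over all views is what distinguishes this from a single-view attack: a pixel change is kept only if it helps the target class on balance across the whole set. For a real object the set of views would be far too large to enumerate, so one would presumably sample random transformations at each step instead.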

To test the approach, the team produced a turtle that the network classified as a rifle, and a baseball that it classified as an espresso. They also tested the objects against different backgrounds. Placing the "rifle" against a watery backdrop didn't spoil the trick; nor did placing the "espresso" in a baseball mitt. According to the paper, the trick seems to work regardless of visual context.

In a world where we increasingly rely on neural networks for intricate and involved tasks, should we worry that they can be fooled like this? The LabSix group point out that, beyond powering Google's image search, these networks are used in "high risk, real world systems". As humans, we are susceptible to certain optical illusions. Perhaps we shouldn't be surprised that neural networks are vulnerable too.
